Grounding ChatGPT-like systems in the real world with Wolfram Alpha

Published:

In this blog post, we explore the potential of integrating ChatGPT with a computational knowledge engine like Wolfram Alpha. In his recent blog post “Wolfram Alpha as the Way to Bring Computational Knowledge Superpowers to ChatGPT”, Stephen Wolfram describes how ChatGPT excels at the “human-like parts” of language processing but can struggle to give precise answers. By connecting ChatGPT with Wolfram Alpha and its vast computational knowledge, we aim to bridge this gap and give ChatGPT the “grounding” in the real world that large language models like GPT are missing. We will create reports with real data and visuals to showcase the potential of this integration. Let’s get started!

Wolfram Alpha and GPT-3 collaborate to create a report with data and visuals.

GitHub repo : https://github.com/landajuela/gpt_wolframalpha

Medium post : Medium post

Before we start, you will need API keys from both Wolfram Alpha and OpenAI. Get them from the Wolfram Alpha and OpenAI websites, then add them to your environment variables:

export OPENAI_API_KEY="YOUR_OPENAI_API_KEY"

export WOLFRAMALPHA_API_KEY="YOUR_WOLFRAMALPHA_API_KEY"

After that, run the following commands to install the necessary libraries. The notebook also uses requests, matplotlib and Pillow, so install those too if they are missing.

pip3 install wolframalpha

pip3 install openai

Now, you can get started with the Jupyter notebook. Import packages.

from pprint import pprint  # pretty print
import os  # for getting environment variables
import textwrap  # for wrapping text
import urllib.parse  # for encoding the query

import matplotlib.pyplot as plt  # for plotting
import openai  # openai API
import requests  # for making HTTP requests
import wolframalpha  # wolframalpha API
from PIL import Image  # for image manipulation

Read the API keys from your environment variables and store them in variables.

# Read the API keys from the environment variables
openai_app_id = os.environ['OPENAI_API_KEY']
wolframalpha_app_id = os.environ['WOLFRAMALPHA_API_KEY']
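If one of the variables is missing, `os.environ[...]` raises a bare `KeyError`. A minimal sketch of a friendlier check follows; `require_env` is a hypothetical helper name introduced here for illustration, not something from the repo.

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or raise a clear error.

    Hypothetical helper for illustration; not part of the original notebook.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set; "
            "export it before starting the notebook."
        )
    return value
```

With this in place, the two assignments above could read `openai_app_id = require_env("OPENAI_API_KEY")` and `wolframalpha_app_id = require_env("WOLFRAMALPHA_API_KEY")`.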

Make the WolframAlpha client. This will be used to query Wolfram Alpha for data and visuals.

def wolframAlpha_client(query: str, includeplot: bool = False) -> dict:
    """Query the Wolfram Alpha Full Results API.

    Args:
        query (str): The query to ask Wolfram Alpha.
        includeplot (bool): If True, request only the pod whose ID is "Plot".

    Returns:
        dict: The parsed JSON response from Wolfram Alpha.
    """
    # URL-encode the query
    query = urllib.parse.quote_plus(query)
    # Build the request URL
    query_url = (
        "https://api.wolframalpha.com/v2/query?"
        f"appid={wolframalpha_app_id}"
        f"&input={query}"
        "&output=json"
    )
    if includeplot:
        query_url += "&includepodid=Plot"
    # Send the request and parse the JSON response
    report_json = requests.get(query_url).json()
    # Return the parsed response
    return report_json
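To see what the client actually sends, it can help to build the URL without making a request. The sketch below is one possible variant that lets `urllib.parse.urlencode` handle all the escaping; `build_query_url` and `WOLFRAM_QUERY_ENDPOINT` are names made up for this example, and `"DEMO"` is a placeholder app ID.

```python
from urllib.parse import urlencode

# Endpoint used by the client above (Full Results API).
WOLFRAM_QUERY_ENDPOINT = "https://api.wolframalpha.com/v2/query"


def build_query_url(query: str, app_id: str, include_plot: bool = False) -> str:
    """Build the request URL; hypothetical helper, for illustration only."""
    params = {"appid": app_id, "input": query, "output": "json"}
    if include_plot:
        # Restrict the response to the pod whose ID is "Plot"
        params["includepodid"] = "Plot"
    # urlencode escapes every value (spaces become "+", like quote_plus)
    return f"{WOLFRAM_QUERY_ENDPOINT}?{urlencode(params)}"


# Prints the URL only; no network request is made here.
print(build_query_url("analyze global temperature in the last 50 years", "DEMO"))
```

Building the parameters as a dictionary also escapes the app ID and any future parameters, which the string concatenation in the client does not do.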

Make the OpenAI client. This will be used to query OpenAI for the GPT-3 completion.

def gpt_client(prompt: str) -> str:
    """Get a completion from GPT-3.

    Args:
        prompt (str): The prompt to send to GPT-3.

    Returns:
        str: The text generated by GPT-3.
    """
    # Set the API key read from the environment (never hard-code it)
    openai.api_key = openai_app_id
    # Note: openai.Completion is the legacy interface of openai-python < 1.0
    response = openai.Completion.create(
        engine="text-davinci-003",  # the model
        prompt=prompt,  # the prompt
        temperature=0.0,  # higher values make the output more random
        top_p=1,  # nucleus sampling threshold; 1 keeps the full token distribution
        max_tokens=1264,  # maximum number of tokens to generate
        frequency_penalty=0,  # no penalty for frequently repeated tokens
        presence_penalty=0,  # no penalty for tokens already present
    )
    # Return the generated text
    return response.choices[0].text

Now we can start the fun part! We first use the Wolfram Alpha client to get the raw data and visuals for a given query. After some post-processing (the helper functions wolframAlpha_report and show_text_and_image are available in the GitHub repo), we use that information to build a prompt that asks GPT-3 for a detailed response. Finally, we merge the response from GPT-3 with the images from Wolfram Alpha to create a report.
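The post-processing helpers live in the repository and are not reproduced in this post. To give a rough idea of what such a step could involve, here is a minimal sketch that walks the `queryresult` → `pods` → `subpods` structure of the JSON response and collects the plain text and image URLs. `summarize_pods` and the `img_urls` key are assumptions made for this example; the repo's own helper may work differently (for instance, it returns image files under `imgs_files`).

```python
from typing import Any, Dict, List


def summarize_pods(report_json: Dict[str, Any]) -> Dict[str, Any]:
    """Collect pod text and image URLs from a Wolfram Alpha JSON response.

    Hypothetical stand-in for illustration; not the repo's implementation.
    """
    lines: List[str] = []
    img_urls: List[str] = []
    pods = report_json.get("queryresult", {}).get("pods", [])
    for pod in pods:
        title = pod.get("title", "")
        for subpod in pod.get("subpods", []):
            # Keep non-empty plain text, prefixed with the pod title
            text = subpod.get("plaintext", "")
            if text:
                lines.append(f"{title}: {text}")
            # Keep the image URL when the subpod has one
            src = subpod.get("img", {}).get("src")
            if src:
                img_urls.append(src)
    return {"report": "\n".join(lines), "img_urls": img_urls}


# Hand-written placeholder response, not real Wolfram Alpha output.
sample = {
    "queryresult": {
        "pods": [
            {
                "title": "Input interpretation",
                "subpods": [
                    {
                        "plaintext": "global temperature",
                        "img": {"src": "https://example.com/a.gif"},
                    }
                ],
            }
        ]
    }
}
print(summarize_pods(sample)["report"])
```

The `report` string is the kind of text that can be pasted into the GPT-3 prompt, while the image URLs would still need to be downloaded before they can be shown next to the essay.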

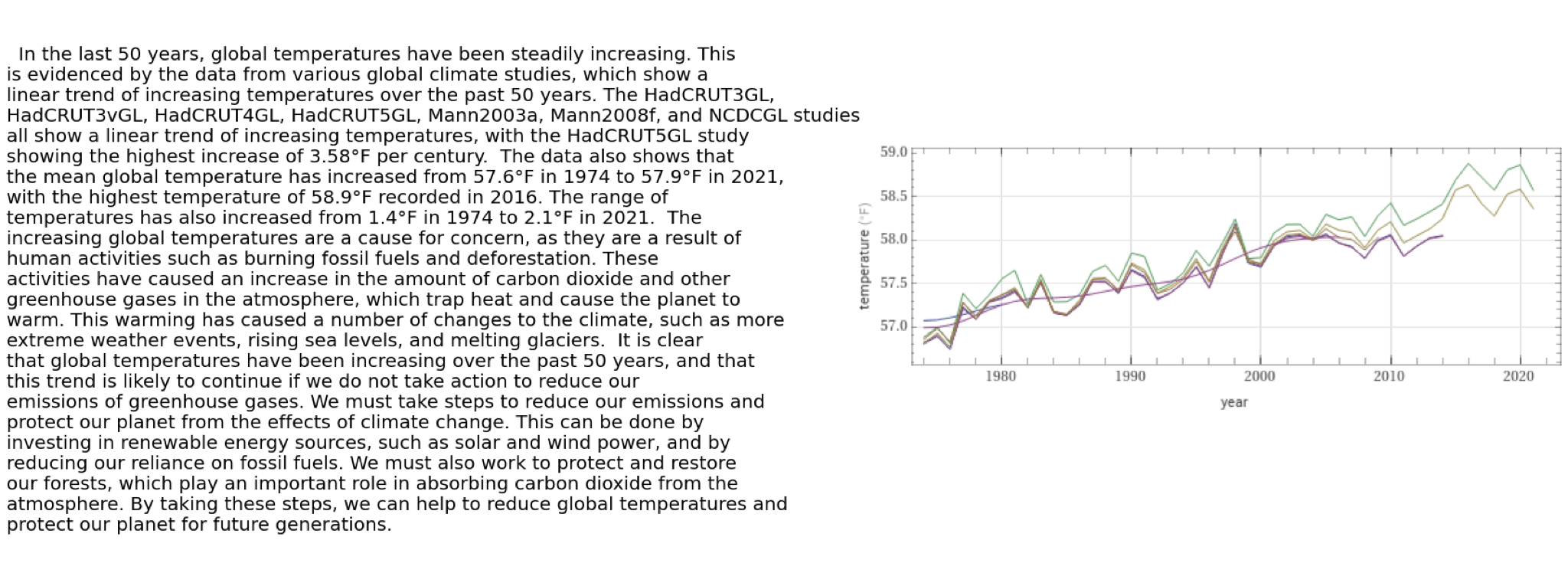

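query = 'analyze global temperature in the last 50 years'
# Query Wolfram Alpha for raw data and visuals
report_json = wolframAlpha_client(query)
# Post-process the response (helper from the GitHub repo)
report_prompt = wolframAlpha_report(report_json)
# Build the GPT-3 prompt from the query and the Wolfram Alpha data
prompt = (
    query.capitalize() + ".\n"
    + "Write an essay.\n"
    + "Use this data:\n" + report_prompt["report"]
    + "\nDo not show tables."
)
gpt_output = gpt_client(prompt)
# Show the essay alongside the images (helper from the GitHub repo)
show_text_and_image(gpt_output, report_prompt["imgs_files"])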
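`show_text_and_image` comes from the repository and is not shown in this post. If you only want to read the essay in a terminal, a text-only stand-in is enough. The sketch below is such a stand-in under that assumption: `format_report` is a hypothetical name, and it lists the image files as placeholders instead of drawing them with matplotlib.

```python
import textwrap
from typing import List


def format_report(text: str, img_files: List[str], width: int = 80) -> str:
    """Wrap the essay text and append one placeholder line per image file.

    Hypothetical text-only stand-in; not the repo's show_text_and_image.
    """
    # Treat blank lines as paragraph breaks and wrap each paragraph separately
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    wrapped = [textwrap.fill(p, width=width) for p in paragraphs]
    # Reference each image by position and file name
    figures = [f"[figure {i}: {name}]" for i, name in enumerate(img_files, start=1)]
    return "\n\n".join(wrapped + figures)
```

Calling `print(format_report(gpt_output, report_prompt["imgs_files"]))` would then print the wrapped essay followed by the list of figures.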


Enjoy!