Neural-Guided Search for Scientific Discovery

Published:

Interest in applying machine learning to scientific discovery has surged in recent years. One promising approach is Deep Symbolic Optimization (DSO), a computational framework that treats the discovery problem as a sequential decision-making task. In this blog post, we provide an overview of the DSO framework and its applications to scientific discovery.

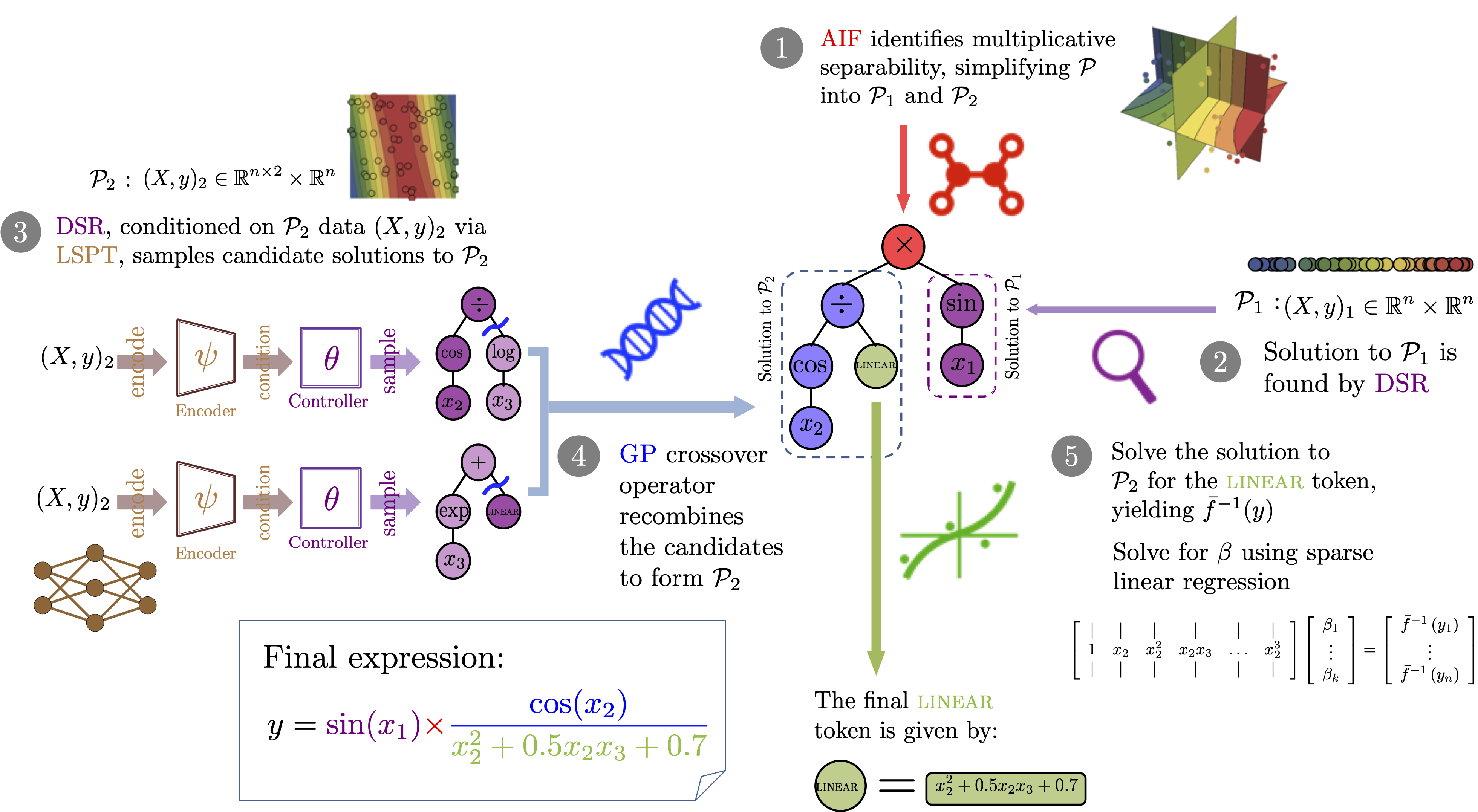

DSO learns a probabilistic model over the space of candidate designs, such as mathematical expressions, control policies, and amino acid sequences. This probabilistic model is parameterized by a generative neural network and is optimized to allocate the search effort in the most promising regions of the design space. The optimization process combines gradient-based techniques from the reinforcement learning literature with evolutionary search methods and/or local search techniques.
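To make the idea of a learned distribution over designs concrete, here is a minimal sketch of one gradient-based update in the risk-seeking policy gradient style named in the references. Everything in it is an assumption made for illustration: the token library, the toy target `x + sin(x)` written in prefix form, the position-matching reward, and the use of independent per-position logits in place of a generative neural network. It is not the DSO implementation.

```python
import math
import random

TOKENS = ["add", "mul", "sin", "x", "1"]   # hypothetical token library
TARGET = ["add", "x", "sin", "x"]          # prefix form of x + sin(x), a toy target


def softmax(row):
    """Turn a list of logits into probabilities."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(seq):
    """Fraction of positions matching TARGET (a stand-in for fit to data)."""
    return sum(TOKENS[i] == t for i, t in zip(seq, TARGET)) / len(TARGET)


def sequence_prob(logits, seq):
    """Probability the policy assigns to a sequence of token indices."""
    p = 1.0
    for row, i in zip(logits, seq):
        p *= softmax(row)[i]
    return p


def risk_seeking_step(logits, rng, batch_size=200, epsilon=0.1, lr=2.0):
    """Sample a batch, then update the logits using only above-threshold samples."""
    probs = [softmax(row) for row in logits]
    batch = [[rng.choices(range(len(TOKENS)), weights=p)[0] for p in probs]
             for _ in range(batch_size)]
    rewards = [toy_reward(seq) for seq in batch]
    # Empirical (1 - epsilon) quantile of the batch rewards.
    index = min(int((1 - epsilon) * batch_size), batch_size - 1)
    threshold = sorted(rewards)[index]
    scale = lr / (epsilon * batch_size)
    for seq, r in zip(batch, rewards):
        if r <= threshold:
            continue  # samples at or below the quantile contribute nothing
        for pos, tok in enumerate(seq):
            for k in range(len(TOKENS)):
                # Gradient of log-softmax with respect to logit k.
                grad = (1.0 if k == tok else 0.0) - probs[pos][k]
                logits[pos][k] += scale * (r - threshold) * grad


def train(iterations=100, seed=0):
    """Run several updates starting from a uniform policy."""
    rng = random.Random(seed)
    logits = [[0.0] * len(TOKENS) for _ in TARGET]
    for _ in range(iterations):
        risk_seeking_step(logits, rng)
    return logits
```

Calling `train()` and then `sequence_prob(logits, [TOKENS.index(t) for t in TARGET])` shows how much probability mass has moved toward the target. Because only the top `epsilon` fraction of each batch drives the update, the search effort concentrates on the best-performing region rather than on average performance.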

One of the key strengths of DSO is its versatility. It allows for the incorporation of in-situ constraints, advanced policy optimization techniques, pre-training of the generative model on related discovery problems, and the use of powerful deep learning frameworks for online learning. DSO can also be given extra knowledge, in the form of priors or representations from Large Language Models, that provides better traction and guidance in the search process.
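One way to picture an in-situ constraint is as a mask applied to the sampling distribution at every step, so that invalid candidates are never generated in the first place. The sketch below assumes a hypothetical token library, a maximum-length constraint, and a rule forbidding a trigonometric function nested inside another. For brevity it uses a single dictionary of logits shared across all positions instead of a neural network, so it illustrates the masking idea only and is not the DSO code.

```python
import math
import random

ARITY = {"add": 2, "mul": 2, "sin": 1, "cos": 1, "x": 0, "1": 0}  # hypothetical library
TOKENS = list(ARITY)
TRIG = {"sin", "cos"}


def allowed_tokens(open_slots, length, max_length, inside_trig):
    """Tokens that keep the partial prefix expression completable and valid.

    open_slots counts unfilled positions, including the one being filled now.
    """
    allowed = set()
    for tok in TOKENS:
        # Every open slot needs at least one more token to be closed.
        min_final_length = length + 1 + (open_slots - 1 + ARITY[tok])
        if min_final_length > max_length:
            continue
        if inside_trig and tok in TRIG:
            continue  # forbid trig functions nested inside trig functions
        allowed.add(tok)
    return allowed


def sample_expression(logits, rng, max_length=7):
    """Sample a prefix-notation expression, masking disallowed tokens at each step."""
    tokens = []
    slots = [False]  # one flag per unfilled slot: is it inside a trig call?
    while slots:
        inside_trig = slots.pop()
        allowed = allowed_tokens(len(slots) + 1, len(tokens), max_length, inside_trig)
        # A masked token gets zero weight, so it can never be sampled.
        weights = [math.exp(logits[t]) if t in allowed else 0.0 for t in TOKENS]
        tok = rng.choices(TOKENS, weights=weights)[0]
        tokens.append(tok)
        slots.extend([inside_trig or tok in TRIG] * ARITY[tok])
    return tokens


def has_nested_trig(tokens):
    """Check a complete prefix expression for a trig function inside another."""
    slots = [False]
    for tok in tokens:
        inside = slots.pop()
        if inside and tok in TRIG:
            return True
        slots.extend([inside or tok in TRIG] * ARITY[tok])
    return False
```

For example, `sample_expression({t: 0.0 for t in TOKENS}, random.Random(0))` returns a list of tokens that is always a complete expression of at most seven tokens. Terminal tokens such as `x` always pass both checks, so the mask never leaves the sampler without an option.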

The DSO framework can be used to discover mathematical expressions and can be combined with other search techniques to improve the search process.

The versatility of DSO has been demonstrated through its successful application to a variety of problems, such as the discovery of mathematical models of data and the discovery of interpretable policies in stochastic control problems. In the case of mathematical discovery problems, DSO has been shown to achieve state-of-the-art performance in terms of accuracy and interpretability in numerous benchmark problems and competitions. In the case of control problems, DSO has been shown to discover policies that are competitive with state-of-the-art neural network policies but with the added benefit of being interpretable and explainable.

Current efforts in the development of DSO are focused on discovering antibody sequences that bind to specific targets based on large-scale molecular simulations, data-driven predictive models, and curated experimental datasets. In this blog post, we have provided an overview of the DSO framework and its applications and discussed future directions for this promising approach to scientific discovery.

References (in reverse chronological order)

- Landajuela et al. 2022. A Unified Framework for Deep Symbolic Regression. NeurIPS 2022.

- Da Silva et al. 2021. Leveraging language models to efficiently learn symbolic optimization solutions. ALA Workshop @ AAMAS 2022.

- Mundhenk et al. 2021. Symbolic Regression via Neural-Guided Genetic Programming Population Seeding. NeurIPS 2021.

- Landajuela et al. 2021. Discovering symbolic policies with deep reinforcement learning. ICML 2021.

- Landajuela et al. 2021. Improving exploration in policy gradient search: Application to symbolic optimization. Math-AI @ ICLR 2021.

- Petersen et al. 2021. Incorporating domain knowledge into neural-guided search via in situ priors and constraints. AutoML @ ICML 2021.

- Kim et al. 2021. Distilling Wikipedia mathematical knowledge into neural network models. Math-AI @ ICLR 2021.

- Petersen et al. 2021. Deep symbolic regression: Recovering mathematical expressions from data via risk-seeking policy gradients. ICLR 2021 (Oral).

- Kim et al. 2020. An interactive visualization platform for deep symbolic regression. IJCAI 2020.